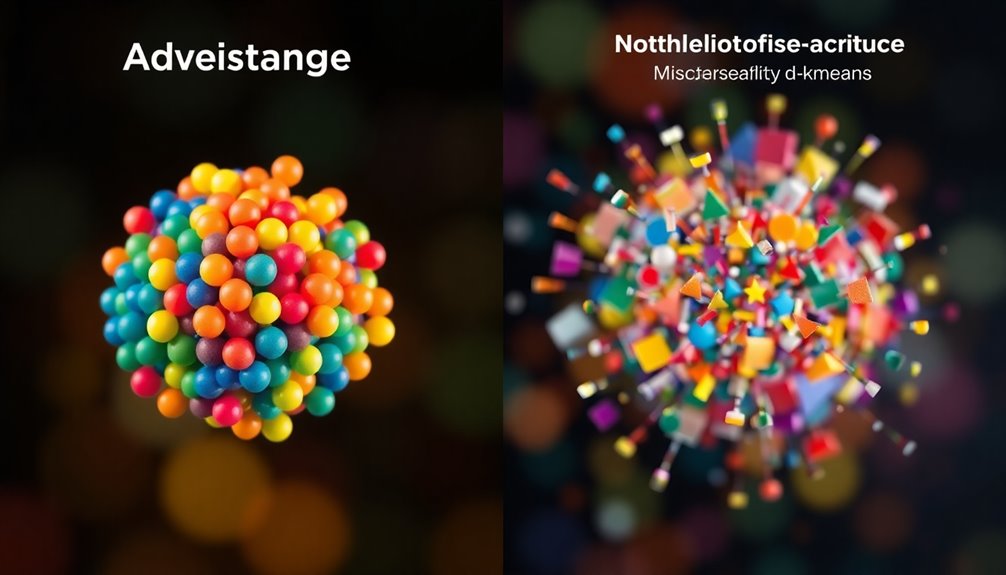

K-Means clustering is efficient, easy to implement, and produces clusters that are simple to interpret, which makes it a common choice for large datasets. However, you must choose the number of clusters in advance, and a poor choice gives misleading groupings. The algorithm is also sensitive to the initial placement of centroids, handles non-globular shapes poorly, and is distorted by outliers. Understanding these trade-offs is essential for effective clustering.

Key Takeaways

- K-Means clustering is computationally efficient: the cost of each iteration grows linearly with the number of data points, and it often converges in relatively few iterations, which makes it suitable for large datasets.

- It is simple to implement and understand, making it accessible to non-technical users.

- However, K-Means requires predefined clusters (K), which may not reflect the true data structure.

- The algorithm is sensitive to initial centroid placement, leading to variability in clustering outcomes.

- K-Means assumes spherical and evenly sized clusters, which can be ineffective for non-globular data shapes.

Overview of K-Means Clustering

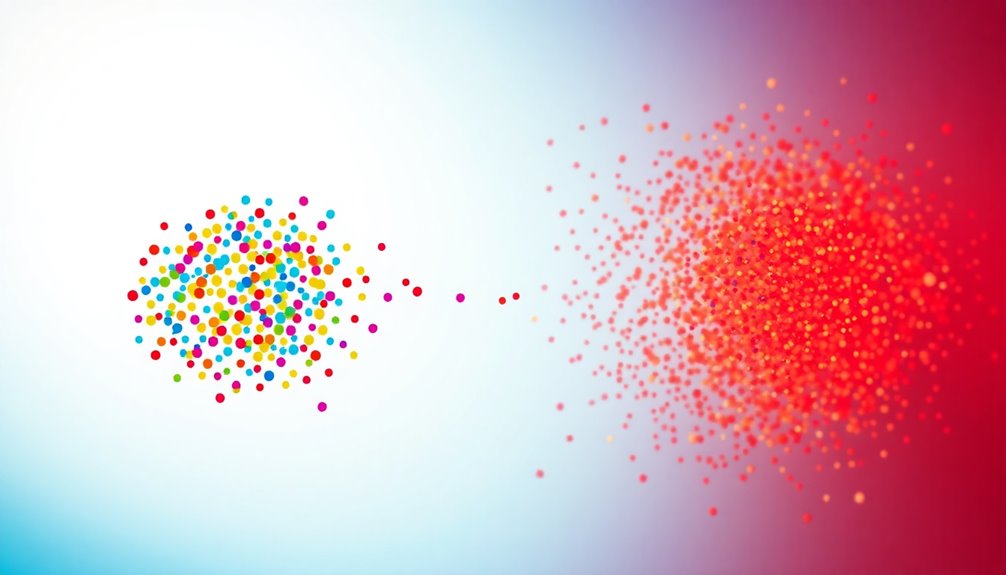

K-Means clustering is a powerful tool for data analysis that helps you group similar data points into distinct clusters. This unsupervised machine learning algorithm partitions data into K distinct clusters based on similarity, with each cluster represented by a centroid.

You start by specifying the number of clusters you want, which is a critical parameter that greatly affects the clustering quality. The algorithm assigns data points to the nearest centroid, and then recalculates centroids iteratively, refining the clusters until minimal changes occur.
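The assign-then-recompute loop described above can be sketched in a few lines of plain Python. This is a minimal illustration under simple assumptions (Euclidean distance, random data points as starting centroids), not a production implementation; the function name `kmeans` and the sample `data` are made up for the example.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Cluster `points` (a list of equal-length tuples) into k groups."""
    rng = random.Random(seed)
    # Start from k data points chosen at random.
    centroids = rng.sample(points, k)
    labels = [0] * len(points)  # placeholder; overwritten on the first pass
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                # Keep an empty cluster's centroid where it was.
                new_centroids.append(centroids[c])
        if new_centroids == centroids:  # nothing moved: converged
            break
        centroids = new_centroids
    return centroids, labels


# Two visibly separate groups of hypothetical 2-D points.
data = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, labels = kmeans(data, k=2)
print(sorted(centroids))
print(labels)
```

On this toy data the first three points should end up in one cluster and the last three in the other. Which cluster gets label 0 depends on the random start.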

While K-Means is effective for identifying patterns in various fields like market segmentation and anomaly detection, it does have its strengths and weaknesses. One major weakness is its sensitivity to the initial placement of centroids, which can lead to different clustering results based on those initial conditions.

This variability can affect the quality of the final clusters, so interpret the results carefully and consider running the algorithm more than once. Overall, K-Means clustering provides a straightforward approach to grouping similar data points, but you need to be aware of its limitations and of how much a sensible choice of K matters for meaningful outcomes.

Key Advantages of K-Means

One of the standout features of K-Means clustering is its computational efficiency, making it ideal for handling large datasets. K-Means offers a simple yet powerful clustering algorithm that's easy to implement and understand, so even if you don't have an extensive technical background, you can gain valuable insights quickly.

Standard K-Means minimizes squared Euclidean distance, but closely related variants adapt the same idea to other measures: K-Medoids works with arbitrary dissimilarities, and spherical K-Means uses cosine similarity. This means you can pick a variant that better matches the characteristics of your dataset.

When you run K-Means, you'll find that the results are generally easy to interpret; the clusters formed represent groups of similar data points, providing clear visualizations of their distributions.

Additionally, K-Means is known for its quick convergence, typically achieving stable clusters within just a few iterations. This rapid processing enhances its practicality for real-time applications, letting you analyze data more efficiently.

Notable Disadvantages of K-Means

A significant drawback of K-Means clustering is the necessity to predefine the number of clusters, K, which can often feel arbitrary and may not truly represent the underlying data structure. This requirement means you might end up with clusters that don't accurately reflect the data you're analyzing.

Additionally, the K-Means algorithm is sensitive to the initial placement of centroids. If your starting points are poorly chosen, you could get different clustering results or converge to local minima, leading to suboptimal configurations.

Another major disadvantage is that K-Means assumes clusters are spherical and evenly sized. This limitation makes it ineffective for datasets with non-globular shapes or varying densities. Outliers further complicate matters, as they can skew the position of centroids and distort overall cluster quality, negatively impacting your results.
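A small arithmetic example shows why outliers matter: a centroid is just a mean, so one extreme point drags it away from the bulk of the cluster. The points below are invented for illustration, and `centroid` is a hypothetical helper.

```python
def centroid(points):
    """Mean of a list of equal-length tuples, one coordinate at a time."""
    return tuple(sum(dim) / len(points) for dim in zip(*points))


cluster = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0), (2.0, 2.0)]
outlier = (20.0, 20.0)

clean = centroid(cluster)
skewed = centroid(cluster + [outlier])

print(clean)   # (1.5, 1.5)
print(skewed)  # (5.2, 5.2)
```

With the outlier included, the centroid sits outside the square formed by the four original points, so it no longer represents any of them well.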

Finally, the algorithm doesn't guarantee finding the global minimum of its objective, the within-cluster sum of squares, especially in complex datasets with multiple local minima. This can result in non-optimal solutions, which can be frustrating when you expect K-Means to provide clear, meaningful insights from your data.

Real-World Applications

Despite its drawbacks, K-Means clustering finds practical applications across various industries. One of its most significant uses is in customer segmentation, where businesses leverage K-Means to identify distinct groups based on purchasing behavior and demographics. This capability aids in crafting targeted marketing strategies, ultimately enhancing customer engagement.

In data analysis, K-Means can act as a form of vector quantization: replacing each point with its nearest centroid compresses the data while preserving its broad patterns. It's also widely used in image processing for color quantization, which reduces an image's palette to K representative colors and enables compression with limited loss of visual quality.

Additionally, K-Means plays an essential role in document clustering by organizing large text corpora into meaningful groups, improving information retrieval and management. It's also useful in anomaly detection: points that lie unusually far from every centroid can be flagged as outliers, which helps in fields like network security and fraud detection.

In bioinformatics, K-Means helps analyze gene expression data by grouping similar gene profiles, enabling researchers to uncover patterns and relationships in biological data.

Choosing the Number of Clusters

Choosing the right number of clusters (K) is vital for achieving meaningful results in K-Means clustering. The ideal K directly influences clustering quality, as too few clusters can lead to underfitting while too many might cause overfitting. You want to find a balance where your clusters of similar data points are well-defined without being overly complex.

One effective technique is the Elbow Method. By plotting the within-cluster sum of squares (WCSS) against various K values, you can identify the "elbow" point, where adding more clusters yields diminishing returns. This visual cue helps you determine the appropriate number of groups.
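For reference, the quantity on the vertical axis of an elbow plot is usually defined as below. The symbols are notation chosen for this note rather than taken from the text: $C_k$ is the set of points assigned to cluster $k$, and $\mu_k$ is that cluster's centroid.

$$
\mathrm{WCSS}(K) = \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert^2,
\qquad
\mu_k = \frac{1}{|C_k|} \sum_{x_i \in C_k} x_i
$$

Because every extra centroid can only bring points closer to their nearest center, the best achievable WCSS never increases as K grows. That is why you look for the bend in the curve rather than its minimum.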

Additionally, you can use the Silhouette Score to evaluate how well-separated your clusters are. The score ranges from -1 to 1; a value closer to 1 indicates that points are much more similar to their own cluster than to neighboring ones, which is what you want.
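To make the score concrete, here is a minimal pure-Python sketch of the mean silhouette coefficient. It assumes Euclidean distance and at least two clusters; the function name, the sample points, and both labelings are illustrative.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (needs at least 2 clusters)."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)

    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # convention: a singleton cluster scores 0
            continue
        # a: mean distance to the other members of the point's own cluster.
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: mean distance to the members of the nearest other cluster.
        b = min(
            sum(math.dist(p, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)


data = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
good = silhouette_score(data, [0, 0, 1, 1])  # groups nearby points together
bad = silhouette_score(data, [0, 1, 0, 1])   # pairs each point with a far one
print(round(good, 3), round(bad, 3))
```

The sensible labeling should score close to 1, while the deliberately poor one comes out negative.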

Experimenting with multiple K values and leveraging domain knowledge can further refine your selection. Because clustering has no ground-truth labels to score against, validation usually means checking stability: if the clusters stay similar when you re-run K-Means on resampled subsets of the data, the chosen K is more likely to suit your dataset.

Implementation Considerations

When implementing K-Means clustering, you'll want to focus on effective data preprocessing techniques to guarantee your features contribute equally.

Choosing the best number of clusters is essential, and you'll likely find methods like the Elbow Method helpful in this regard.

Finally, don't overlook computational efficiency strategies to streamline your model fitting process and achieve better results.

Data Preprocessing Techniques

Effective data preprocessing techniques are essential for achieving ideal results with K-Means clustering. First, you need to guarantee that all features contribute equally to distance calculations by applying normalization or standardization. This step markedly enhances clustering accuracy.
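As a sketch of what standardization does, the snippet below converts each column to z-scores (mean 0, standard deviation 1) using only the standard library. The `standardize` helper and the customer figures are hypothetical.

```python
import statistics


def standardize(rows):
    """Rescale each column to mean 0 and standard deviation 1 (z-scores)."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # Population standard deviation; fall back to 1.0 for constant columns.
    stdevs = [statistics.pstdev(col) or 1.0 for col in columns]
    return [
        tuple((value - m) / s for value, m, s in zip(row, means, stdevs))
        for row in rows
    ]


# Hypothetical customers: (age in years, annual income in dollars).
customers = [(25, 30_000), (35, 60_000), (45, 90_000)]
scaled = standardize(customers)
for row in scaled:
    print(tuple(round(v, 3) for v in row))
```

Before scaling, income differences of tens of thousands would swamp age differences of a few years in any Euclidean distance. After scaling, both columns span the same range, so neither dominates.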

Handling missing values is also critical; you can either impute or delete them, as K-Means relies on complete datasets for accurate centroid calculations.

Next, consider outlier detection and removal. Since K-Means is sensitive to outliers, their presence can skew centroid positions and distort cluster formation. Removing these outliers can lead to more reliable clustering results.

Additionally, employing dimensionality reduction techniques like PCA (Principal Component Analysis) helps simplify the data structure, reducing computational complexity and improving performance before you apply K-Means.

Finally, feature selection plays an important role. Irrelevant or redundant features can diminish clustering quality, so it's important to select the most informative features.

Optimal K Selection

Determining the ideal value of K is essential for achieving meaningful clustering results. The best K greatly influences your clustering outcome; too few clusters can lead to overlooked data patterns, while too many can cause overfitting.

One effective technique for selecting K is the Elbow Method. By plotting the within-cluster sum of squares (WCSS) against various K values, you can identify the point where the rate of decrease sharply changes, suggesting a suitable number of clusters.
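The procedure can be sketched as follows: fit K-Means for a range of K values, record the WCSS for each, and look for the bend. This is a self-contained illustration in plain Python with a deliberately minimal `kmeans` helper and synthetic data; the helper names, seeds, and blob centers are all assumptions made for the example.

```python
import math
import random


def kmeans(points, k, seed=0, max_iter=100):
    """Minimal K-Means; returns (centroids, labels)."""
    centroids = random.Random(seed).sample(points, k)
    labels = []
    for _ in range(max_iter):
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        updated = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                updated.append(tuple(sum(d) / len(members) for d in zip(*members)))
            else:
                updated.append(centroids[c])  # leave an empty cluster in place
        if updated == centroids:
            break
        centroids = updated
    return centroids, labels


def wcss(points, centroids, labels):
    """Sum of squared distances from each point to its assigned centroid."""
    return sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))


# Three tight, synthetic blobs around (0, 0), (10, 0), and (5, 9).
rng = random.Random(42)
data = [
    (cx + rng.uniform(-1, 1), cy + rng.uniform(-1, 1))
    for cx, cy in [(0, 0), (10, 0), (5, 9)]
    for _ in range(20)
]

curve = {}
for k in range(1, 7):
    # Keep the best of several restarts so one bad start does not bend the curve.
    curve[k] = min(wcss(data, *kmeans(data, k, seed=s)) for s in range(10))
    print(k, round(curve[k], 1))
```

For data like this, you would expect the printed values to fall steeply up to K = 3 and then level off. In practice you would plot `curve` rather than read the numbers.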

Additionally, re-running the clustering on different subsets of the data, in the spirit of cross-validation, lets you assess how stable your clusters are. If the groupings change substantially from one subset to the next, your chosen K is probably not robust.

Don't forget to leverage your domain knowledge, as it provides valuable context for determining the appropriate number of clusters based on expected groupings.

It's also wise to experiment with multiple K values. Evaluate your clustering results using metrics such as the silhouette score (higher is better) or the Davies-Bouldin index (lower is better) to gauge the compactness and separation of the clusters.

Computational Efficiency Strategies

To enhance the computational efficiency of K-Means clustering, consider implementing key strategies that streamline the process. First, utilize the Scikit-Learn library, which simplifies the implementation of K-Means clustering, allowing you to set up and execute the algorithm with minimal coding effort. This efficiency is vital, especially when working with large datasets.

Next, focus on preprocessing the data through normalization and standardization. By ensuring that all features contribute equally to the distance calculations, you can greatly improve clustering performance.

Additionally, applying the Elbow Method will help you identify a suitable number of clusters (K). This involves plotting the within-cluster sum of squared distances (WCSS) against various K values, so you avoid spending computation on more clusters than the data supports.

Lastly, consider leveraging parallel processing and distributed computing. These techniques can greatly enhance the performance and scalability of the K-Means algorithm, particularly when handling extensive datasets.

Advanced Techniques and Tips

While many people may rely on basic K-Means clustering techniques, incorporating advanced strategies can greatly enhance your clustering results. Start by using the Elbow Method to determine the ideal number of clusters (K). This method analyzes the within-cluster sum of squares (WCSS) to find where adding more clusters offers diminishing returns.

Additionally, normalize or standardize your data beforehand. Doing so guarantees that all features contribute equally to distance calculations, improving clustering outcomes. Advanced centroid initialization methods like K-Means++ can also help, making the algorithm less sensitive to initial placements and speeding up convergence.
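The seeding idea behind K-Means++ can be sketched briefly: pick the first centroid at random, then pick each further centroid with probability proportional to its squared distance from the nearest centroid chosen so far. The version below is a simplified illustration in plain Python, assuming the data contains at least `k` distinct points; `kmeans_pp_init` and the sample data are hypothetical names.

```python
import math
import random


def kmeans_pp_init(points, k, seed=0):
    """Pick k starting centroids using K-Means++ style seeding."""
    rng = random.Random(seed)
    centroids = [rng.choice(points)]  # first centroid: uniform at random
    while len(centroids) < k:
        # Squared distance from each point to its nearest chosen centroid.
        weights = [min(math.dist(p, c) ** 2 for c in centroids) for p in points]
        # Sample the next centroid with probability proportional to that weight,
        # so far-away points are favoured and already-chosen points get weight 0.
        centroids.append(rng.choices(points, weights=weights, k=1)[0])
    return centroids


data = [
    (0.0, 0.0), (0.5, 0.2), (0.1, 0.6),
    (10.0, 0.0), (10.4, 0.3), (9.8, 0.5),
    (5.0, 9.0), (5.3, 9.4), (4.7, 8.8),
]
starts = kmeans_pp_init(data, k=3)
print(starts)
```

Because distant points carry most of the weight, the starting centroids tend to land in different regions of the data, although the sampling is still random and a spread-out start is likely rather than guaranteed.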

Consider employing dimensionality reduction techniques, such as PCA, to simplify complex datasets. This reduction minimizes noise and can lead to better clustering results. Finally, hybrid approaches that combine K-Means with hierarchical clustering can yield robust solutions by leveraging the strengths of both techniques.

Here's a quick summary of these advanced techniques:

| Technique | Purpose | Benefits |
|---|---|---|
| Elbow Method | Determine ideal K | Identify diminishing returns |
| Normalization/Standardization | Equal feature contribution | Improved distance calculations |
| K-Means++ Initialization | Enhance centroid placement | Faster convergence |
| Dimensionality Reduction | Simplify datasets | Better clustering outcomes |

Frequently Asked Questions

What Are the Advantages and Disadvantages of K-Means?

When you consider K-Means clustering, you'll notice several advantages and disadvantages.

It's efficient, handling large datasets easily, and it's simple to implement, making it great for beginners. You can interpret the results quickly, as each cluster has a clear centroid.

However, you need to pre-specify the number of clusters, which can lead to less ideal results if your choice doesn't match the data's true structure.

What Are the Disadvantages of K-Means?

When using K-Means, you might face several disadvantages.

First, you need to pre-specify the number of clusters, which can lead to poor results if you choose incorrectly. The algorithm's sensitivity to initial centroid placement can also produce varying outcomes.

Additionally, K-Means assumes spherical clusters, making it less effective for irregular shapes.

Finally, outliers can skew your results, so you might need to preprocess your data for more accurate clustering.

What Are the Pros and Cons of K-Means?

When you consider K-Means clustering, you'll find several pros and cons.

On the plus side, it's computationally efficient and easy to implement, making it ideal for large datasets. You can quickly visualize and interpret the clusters.

However, you have to pre-specify the number of clusters, which can lead to less-than-optimal results if chosen incorrectly.

What Are the Disadvantages of Nearest Neighbours Analysis?

When you use nearest neighbors analysis, you might face several disadvantages.

First, it's computationally intensive, especially with large datasets, leading to lengthy processing times.

It's also sensitive to the distance metric you choose, which can skew your results.

If your dataset has class imbalance, it may favor the majority class, causing biased predictions.

Additionally, irrelevant features can distort distance calculations, and it doesn't handle missing values well, requiring extra preprocessing steps.

Conclusion

To summarize, K-Means clustering offers a straightforward approach to data segmentation, making it popular for various applications. However, it's crucial to weigh its advantages against notable disadvantages, like sensitivity to initial conditions and the need to specify the number of clusters. By understanding these factors, you can effectively implement K-Means in your projects. Remember to explore advanced techniques and tips to enhance your clustering results and make informed decisions that suit your specific needs.